Build System Analysis

A new project is ongoing and documented here: Build System Analysis:capstone2013 windows build

A comparison of build.pl versus a central makefile: Build System Analysis:build.pl versus makefile

The ongoing discussion to switch from svn to git is documented here: Build System Analysis:switch from svn to git

The recursive-make problem

Our current build system suffers from the well-known “recursive make syndrome”. Large projects with many directories, libraries and other build targets often use a central makefile that invokes “make” processes on the makefiles of all sub-directories. OOo has a variant of that procedure: the central make process is a Perl script, and the central makefile is a multitude of build.lst files that define dependencies between directories. In the end this doesn't make a fundamental difference.

A make-based recursive build system has advantages that make it popular: it allows building the whole system or only parts of it, and it is easy to set up if you already have makefiles for all parts of the project. But it also has many shortcomings that can become serious problems. They all stem from the same basic mistake: the make tool never sees the whole dependency graph of the project it is supposed to build. Each make process only knows a subset of the overall set of dependencies.

If a make tool knows the whole (and correct!) dependency graph, it can ensure a correct build by performing a post-order traversal of the graph, so that every prerequisite is built before the targets that need it. If the make tool never sees the whole graph, it can't follow this ideal order and will instead use a worse or perhaps even incorrect one. This can result in build breaks or in corrupt or incomplete builds. So manual tweaking is applied to the make process that (like all tweaking) might work under some circumstances but may break (or silently produce garbage) under others.
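The post-order traversal mentioned above can be sketched in a few lines of Python. This is only an illustration: the graph, the file names and the function name `build_order` are hypothetical and not part of build.pl or any make tool.

```python
def build_order(deps, goal):
    """Return everything needed for `goal` in post-order:
    each prerequisite appears before the target that needs it."""
    order, done, in_progress = [], set(), set()

    def visit(target):
        if target in done:
            return
        if target in in_progress:
            raise ValueError(f"dependency cycle at {target}")
        in_progress.add(target)
        for prereq in deps.get(target, []):
            visit(prereq)
        in_progress.discard(target)
        done.add(target)
        order.append(target)

    visit(goal)
    return order


# Hypothetical file-level graph: target -> list of prerequisites.
deps = {
    "app": ["main.o", "libutil.a"],
    "main.o": ["main.c", "util.h"],
    "libutil.a": ["util.o"],
    "util.o": ["util.c", "util.h"],
}
```

Calling `build_order(deps, "app")` lists `main.o` and `libutil.a` before `app`, and every source or header before the object that uses it. The order is only trustworthy because the function sees the complete graph; a process that sees just one directory's slice cannot make that guarantee.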

Not surprisingly our current build system shows the typical symptoms of this disease:

- It is hard to get the order of the recursion into the sub directories right. If a particular build order works, this may be just by luck. It is still possible that the build will break even without any code changes just by using different degrees of parallelization or building different parts of the project (something that is well known in our current build system).

- A full build always requires entering all sub directories and invoking their makefiles, even if they won't have to do anything. This leads to long build times for small changes. Usually this is “fixed” by omitting dependency information or intentionally building less. If not done properly, this may lead to incomplete or corrupt builds, so that at times a build “from scratch” or “from module X” is needed as a repair. In any case it requires special knowledge on the part of the developer, who here takes over some of the duties of the build system.

- Inter-directory dependencies are very coarse (compared to the file-based dependencies that a complete dependency graph contains). Some changes cause a build that just builds too much (“incompatible builds”).

- This wrong level of granularity, that also can be seen as an inaccuracy of dependencies, prevents the build system from optimal parallel execution as the coarse dependencies segment the build process and create artificial boundaries. Dependencies should exist between files, not between directories.

- The same inaccuracy makes it hard to continue building as much as possible in case of build errors. If a build error happens while building a particular target, every other target in the project that does not depend on the broken one can still be built. This works nicely if the dependency granularity is on the file level; it works much less efficiently (or not at all) if it is on the directory or “module” level. The ability to build as much as possible in case of a build error is especially important for builds that take several hours overnight. It can also reveal more than one build breakage per build and so save even more time.
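The effect of dependency granularity on "build as much as possible after an error" can be illustrated with a small Python sketch. Both graphs and all names in it are made up for the illustration; they only model the idea, not the behaviour of any particular tool.

```python
def still_buildable(deps, failed):
    """Targets that can be built although the targets in `failed` broke:
    everything that does not depend, directly or indirectly, on a failed one."""
    blocked = set(failed)
    changed = True
    while changed:  # propagate the failure to all dependents
        changed = False
        for target, prereqs in deps.items():
            if target not in blocked and blocked.intersection(prereqs):
                blocked.add(target)
                changed = True
    all_targets = set(deps) | {p for ps in deps.values() for p in ps}
    return all_targets - blocked


# Hypothetical file-level graph: two libraries in module "a", one tool in module "b".
file_deps = {
    "a/one.o": [], "a/two.o": [],
    "a/libone.so": ["a/one.o"],
    "a/libtwo.so": ["a/two.o"],
    "b/tool.o": [],
    "b/tool": ["b/tool.o", "a/libone.so"],
}

# The same project seen at module level: "b" depends on "a".
module_deps = {"a": [], "b": ["a"]}
```

If `a/two.o` fails to compile, `still_buildable(file_deps, {"a/two.o"})` still contains `b/tool`, because the tool only links against `a/libone.so`. At module level the same error makes module "a" fail as a whole, and `still_buildable(module_deps, {"a"})` is empty.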

Analysis of the current build system

In our build system inter-directory dependencies are "module" and "directory" dependencies in the build.lst files. Each module has a build.lst file that lists all other modules that need to be built before this one. It also defines dependencies between the directories inside of the module.

Our central build tool is a Perl script called build.pl that can be used in two ways (all other usage variants of build.pl are workarounds that we use to overcome its shortcomings). It can build a single module (evaluating only the in-module directory part of the build.lst file of that module), or it can build a module together with all modules it depends on. In the first variant the build will of course work only if all of these "prerequisite" modules have been built before.

In the second variant all build.lst files of the found module prerequisites are evaluated also. Using all these build.lst files, build.pl calculates a module and directory dependency graph and performs a post-order traversal on it. This is better than the "classical" approach to hard code the order by invoking make sub processes. The module dependency graph is loop free and it allows for some dynamics and doesn't require rewriting of a hard coded order in case of changes. Header dependencies in our build system can even pass module boundaries so that in case of a correct module build order a stable build is doable – at least in theory.

In classical makefiles dependencies are bound to prerequisites and are declared next to the rules. In our build system the prerequisites are the modules, and this makes building the "real" prerequisites (libraries, generated headers etc.) a side effect of the formal prerequisites. So the build system can't check whether the needed prerequisites are there; it relies on the developer's ability to guide it through the directory hierarchy. In short: side effects are not conducive to stability. Everybody who writes code knows this. And makefiles are code, too.

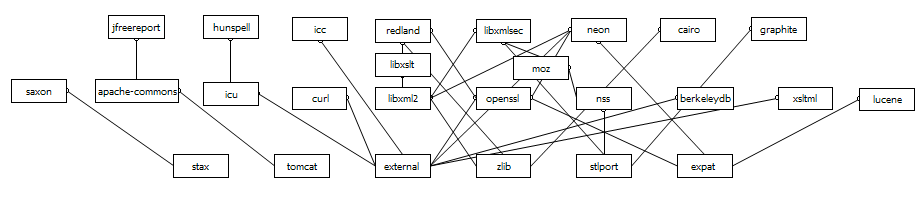

Consider the following module dependencies of external modules:

The module "lucene" has a dependency on the module "expat". If the developer had forgotten to specify this dependency in the "lucene" module's build.lst file, a full build would break only if it tried to build lucene before "neon" or "openssl". These modules depend on "expat" also and so would trigger a build of "expat". If that happened before the build would proceed to "lucene", the build would have delivered the desired prerequisites, the libraries and headers from expat, as a side effect. So it wouldn't break. But the build order of modules without a dependency between them is undefined, so it's just by chance if the build breaks or not. This problem exists for all the >200 modules in our build system. As the declaration of dependencies is not done in the same file as the declaration of the build rules, the system is more prone to inconsistent specifications than a system where both are declared in the same file and at the same place.

All in all it is practically impossible to find out whether the specified dependencies are sufficient to provide all the necessary prerequisites for the targets in a particular module. The only way to check that is to try, but as the example has shown, even a successful build is no proof of complete dependencies. A necessary prerequisite might have been built as a side effect of the particular build order, or because other targets that were built in a parallel process had a dependency on the module delivering this prerequisite.
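The "lucene"/"expat" scenario can be replayed with a small simulation. The following Python sketch is a simplified model of a module-level build, not of build.pl itself; the function name `try_build` and the two dictionaries are assumptions made for the illustration.

```python
def try_build(order, declared, needed):
    """Build modules in the given order, pulling in *declared* prerequisites
    first. Return the first module whose *real* needs are not met, or None
    if the build happens to succeed."""
    built = []

    def build(module):
        if module in built:
            return None
        for prereq in declared.get(module, []):
            broken = build(prereq)
            if broken:
                return broken
        if any(m not in built for m in needed.get(module, [])):
            return module
        built.append(module)
        return None

    for module in order:
        broken = build(module)
        if broken:
            return broken
    return None


# What the modules really need ...
needed = {"lucene": ["expat"], "neon": ["expat"], "openssl": ["expat"]}
# ... and what the build.lst files say: the entry for "lucene" was forgotten.
declared = {"lucene": [], "neon": ["expat"], "openssl": ["expat"]}
```

With the order `["neon", "lucene", "openssl"]` the function returns `None`: "expat" was delivered as a side effect of building "neon", so the missing dependency goes unnoticed. With `["lucene", "neon", "openssl"]` it returns `"lucene"`. Both orders are allowed by the declared dependencies, which is exactly why a green build proves nothing here.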

Back to the "classical" makefile syntax. Simply put, it consists of three basic elements: targets, prerequisites and build rules.

In a non-recursive build system, where all of these definitions are collected in a single process, a mistake analogous to the missing dependency of the "lucene" example would be to forget to include the makefile for the prerequisites that are built in the "expat" module. Then the build tool does not know the rule to build them. If includes are done in the central makefile only, there is no possible side effect that could add this missing rule under some different conditions: a clean build including "lucene" but not including the "expat" makefile(s) will always break. So a stable build requires that the makefiles of all smallest build units are included into one central makefile. Each of these smallest build units must be tested to work in case all "external" prerequisites are present.
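Why a forgotten include fails reliably, rather than by chance, can be shown with a minimal Python model of "including" makefiles into one process. The file names, the dictionaries and both helper functions are hypothetical and only stand in for what a make tool does internally.

```python
def merge(*makefiles):
    """Model 'include': combine the rules of several makefiles into one graph."""
    rules = {}
    for makefile in makefiles:
        for target, prereqs in makefile.items():
            rules.setdefault(target, []).extend(prereqs)
    return rules


def missing_rules(rules, sources):
    """Prerequisites that are neither existing source files nor buildable."""
    wanted = {p for prereqs in rules.values() for p in prereqs}
    return sorted(wanted - set(rules) - set(sources))


# Hypothetical file names; each makefile maps target -> prerequisites.
lucene_mk = {"lucene.lib": ["lucene.o", "expat.lib"], "lucene.o": ["lucene.c"]}
expat_mk = {"expat.lib": ["expat.o"], "expat.o": ["expat.c"]}
sources = ["lucene.c", "expat.c"]
```

`missing_rules(merge(lucene_mk), sources)` reports `["expat.lib"]` on every clean build, independent of build order or parallelism, while `missing_rules(merge(lucene_mk, expat_mk), sources)` is empty. The mistake is visible immediately instead of being masked by side effects.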

In conclusion the biggest problems in our build system are that its dependency level (modules) does not match the target and prerequisites level (files) and that the declaration of the targets and prerequisites is done in different files. Besides that, the other mentioned problems of recursive make systems still are present. Some of them appear in a special way and also some new problems are found:

- Directories and modules are the smallest units known to the build tool (build.pl), and in some cases this creates bottlenecks in the build. These bottlenecks lead to the mentioned mediocre ability to perform parallel builds or to proceed after errors. Sometimes only one make process is running even if 4 or 8 processes have been allowed to run.

- Only a few prerequisites (generated headers) exist for compiling C++ source files, and they can be built comparatively early. Without the artificial requirement that code in a module can't be built before the “prerequisite modules” have been built and all of their output and headers have been delivered, we could run hundreds of compilation threads in parallel most of the time. We could also compile as many files as possible even if a few of them compile with errors.

- A “build --all” takes quite some time even if nothing needs to be built. This is a problem especially on Windows, where file system traversal and creation of processes are more expensive than on Unix-like systems. On the same hardware and with local disk space, a Windows build of OOo that just checks dependencies but builds nothing takes 3-4 times as long as a Linux build.

- The “omitting dependencies or intentionally building less” hack has two implementations in our system:

- Build single modules instead of using “build --all”. While this is fine in many cases (though a little bit cumbersome if more than one module is involved in the code change), it requires special knowledge: the developer does the job of the make tool by evaluating dependencies in his brain.

- Not taking headers of other modules into account when creating dependency files for source files. Again this works if the developer explicitly knows what needs to be rebuilt manually, but fails otherwise. This is considered to be a very bad practice. Usually a “make -t” is used to overrule make's derivation of what to build, but unfortunately the bad performance of a source tree traversal in our build system makes that unpopular.

- Manual interventions into the build process are a frequent source of errors which may lead to corrupt or incomplete builds. As a result, manual rebuilds (or, in case the “special knowledge” about what to rebuild is not available, complete rebuilds) are required at times.

- In case the number of files to rebuild manually becomes very large, the “fix” is that the output of all modules “above” the module containing the offending change is discarded, thus building much more than necessary.

- As the makefile does not have the complete information, a “make clean” does not always work well and requires manual work done in several steps.

- In a build process with a complete set of dependencies all dependencies are evaluated only once. This means also that each file that appears in the graph is “stat”ed once only. In our system the same dependencies are evaluated again and again (if we don't use the bad hack “module internal header dependencies”). This makes the build slower, especially on Windows. It doesn't hurt a clean build that much, but it hurts the build times in the daily development turnaround builds.
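The last point, repeated evaluation of the same dependencies, can be made concrete with a deliberately crude counting model in Python. The module layout and both function names are invented for the illustration, and real stat counts depend on many more factors.

```python
def stats_recursive(module_files):
    """Every per-module make process checks each file it references."""
    return sum(len(files) for files in module_files.values())


def stats_single_graph(module_files):
    """One process holding the whole graph checks each distinct file once."""
    return len(set().union(*module_files.values()))


# Hypothetical modules that all include the same delivered header.
module_files = {
    "a": {"a.cxx", "shared.hxx"},
    "b": {"b.cxx", "shared.hxx"},
    "c": {"c.cxx", "shared.hxx"},
}
```

In this toy layout the recursive variant performs 6 file checks and the single-graph variant 4. The gap grows with the number of modules that share headers, which is where a "do nothing" build spends its time.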

How to fix that?

We tried to find ways to overcome the mentioned problems. There are some things to consider:

- "Classical" non-recursive makefiles also offer ways to add instability by giving incomplete information. As discussed in the analysis of the current build system, a clean build will work always (and only) in case all necessary makefiles have been included and so rules for all targets to be built have been specified. But if only rules have been specified completely, but some prerequisites have not been declared, rebuilds will not be executed properly, as dependencies are missing. Detecting this kind of instability is also hard, so a better build system should try to avoid that this can happen.

- Building only parts is a bad idea if the result is meant to have a quality that can be tested, but it is still a valid request if e.g. a particular file just should be compiled. A “classical” non-recursive make where the sub-makefiles can't be used separately does not allow to do that easily.

- As all makefiles are loaded into the same process, a concept is needed to keep the whole thing manageable. The sub makefiles should be as simple as possible. As with all software, without a good design the build system itself may become a big ball of mud.

- In big projects people fear that throwing all dependencies, targets and rules into one process can create performance or memory problems.

- Another important point that we need to consider is that a migration path from the current to a new or improved build system is needed. We need to convert modules one by one, as we don't have the manpower to convert all modules at once in a short time frame but OTOH don't want to maintain two parallel sets of makefiles over several months.

- A special problem in our build is the presence of generated headers. One might think that generated headers are just like other prerequisites, but they aren't, because there are so many of them. If there was only one generated header next to a C++ source file, it could be added to the makefile together with the rule to generate it. But we have several thousand headers that are searched for in all include directories, so that before a header is created we do not even know its exact path name. In our current build system we just hide that behind a recursive build where the module providing the generated headers must be built before the modules that use them. This is achieved by adding explicit module dependencies. In a build system that relies on a complete dependency tree there must be a way to insert dependencies dynamically at runtime of the make process.

Getting the dependencies right is crucial for the stability of the build. So it would be great if a build system required developers to add dependencies explicitly as rarely as possible and instead generated the necessary information automatically from the makefiles or the sources.

CMake

CMake is a build system that at first just looks like a replacement for GNU autotools, in particular automake and libtool. And in fact it configures the build system in a comparable way and creates (exports to) makefiles runnable on a particular system from generic CMake makefiles. An important difference is that CMake does more than adjust system dependent parts like libraries and path names. It also takes part in the dependency evaluation and helps to overcome the build stability problem mentioned above. As targets and dependencies, and so the CMake makefiles, may change over time, CMake stays in the loop and checks the build system for changes in every run. An interesting feature of CMake is that it can export to makefiles for a lot of “back ends”, not only GNU Make, but also vcproj files for building with Visual Studio. [Remark: I chose the term "exports to" as it is a one-way process; there is no way back that would merge changes made in the "native" makefiles into the CMake makefiles.]

CMake still creates (exports to) recursive makefiles, but by evaluating the dependencies of all targets in the creation step, which itself is done in a single process, it is able to create a stable order for the recursive calls. A further improvement to our current build system is that dependencies are specified in the same files as the targets and rules. So CMake fixes the most important problem (unstable builds), but because the build itself is carried out by recursive calls, it doesn't address the other mentioned problems. So on Linux, where CMake uses GNU Make to perform the build, it does not offer any advantages over a “native” non-recursive GNU Make approach.

Switching to CMake would have some further drawbacks.

CMake builds can be started only from one dedicated directory, the working directory where all intermediate makefiles have been created and form the recursive build tree. It is possible to build a particular target, but it must be specified with the symbolic name it got in the build system, and the popular build request “build all targets in one module” requires several build steps using explicit target names or some scripting. This is not an OOo specific issue; it was already criticized on the CMake mailing list by developers from other projects using CMake.

The biggest problem of a switch to CMake besides the ones already mentioned is that it does not offer a migration path for us. Switching our build system to CMake would require a one shot conversion, including parallel maintenance of makefiles for the whole duration of the switch (that is estimated to last for several months). There is no maintainable way to plug CMake builds of single modules into a build controlled by build.pl until all modules have been converted and then just add a top level CMake file on top. The CMake files we have to create will look different when called from a top level CMake file than if they are a top level makefile themselves.

On Windows CMake allows building in a native shell and even inside the Microsoft IDE. Similar support exists for Xcode on the Mac. This has not been evaluated in close detail until now, as we lacked the necessary time. First attempts revealed that even if a build worked on Linux it still needed some tweaking to let it run in the Visual Studio IDE. The "write once, debug everywhere" nightmare can't be excluded. Building in a native shell might be faster than building in a Cygwin shell, but from past experience we hesitate to go for a native Windows tool chain as a replacement for the typical Unix tools like grep, cat, tr, sed etc., tools that we will need for the multitude of custom targets in our build.

But there is another reason why building in a Cygwin shell might be better than building in a native shell. Our current plan is not to touch the packaging process (a huge system of Perl scripts and configuration files) at all and just change its "launchpad" from dmake to something else. This requires staying with Cygwin.

So it looks as if for CMake builds outside of an IDE we would go for GNU Make files on all platforms, using Cygwin on Windows, but then again the benefit of CMake is not that large (the same as on Linux). An IDE could be used in the daily developer work, so this would be the situation where CMake would give the biggest benefit.

A new approach with GNU Make

We have thought about a build system that not only gives stable builds, but also overcomes the other mentioned problems. It also should still allow the popular “one module” build without tedious procedures.

As in CMake there will be a top-level makefile. But unlike CMake, this makefile will not call “make” for the child makefiles, it will include (“load”) them. From benchmarks and measurements we concluded that this will not create scalability or memory consumption problems. For convenience the included makefiles are planned to reflect the module structure of our project. But this is not required; we could create any "module" structure we wanted. The key to that ability is that we create a makefile for every target (a library, a resource file, a bunch of generated header files etc.) and then group targets together into "modules" by including the makefiles of the single targets. This grouping can be changed easily later on, but for the time being going with our current modules seemed to be appropriate.

A single “make” call can be done on the top level makefile as well as on each of the module make files. The latter will evaluate complete header dependencies, but only module internal link dependencies (same as today when single modules are built), the former always will work on the complete dependency tree. Making a single target will require actions like that in CMake ("make $TARGETNAME").

With very simple additional makefiles any combination of modules can be built together. This leaves a lot of flexibility for future development.

We have chosen GNU Make for several reasons:

- the GNU tool chain has the best possible platform support

- it has been well known to many people for many years

- it already has been used for non-recursive build systems successfully

- it has some features that help to work with huge and complicated dependencies:

- the “eval” function, which allows multi-line macros to be used anywhere

- variables that are local for a particular target

- ability to restart dependency evaluation after building targets

Here's an example that shows the value of "eval". The macro defines some variables with a prefix passed in as an argument. This doesn't work without the "eval" function.

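```make
# Usage: $(call vars,vars-prefix,file-list)
# Defines <vars-prefix>_foo and <vars-prefix>_bar from the names in file-list.
define vars
$1_foo = $(filter %.foo,$2)
$1_bar = $(filter %.bar,$2)
endef

# Doesn't work without eval: $(call vars,myvars,ooo.foo ooo.bar oops.foo oops.bar)
$(eval $(call vars,myvars,ooo.foo ooo.bar oops.foo oops.bar))

# The recipe lines below must start with a tab character.
show-vars:
	# $(myvars_foo)
	# $(myvars_bar)
```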

It shows the result:

```
$ make
# ooo.foo oops.foo
# ooo.bar oops.bar
```

So "eval" allows for much more powerful macros.

The classical way to write makefiles is specifying targets, their prerequisites (thus creating dependencies on them) and rules how to build the targets from the prerequisites. Even in a non-recursive build system it is quite possible to add instability (build works by luck in one scenario, but breaks in another one) by forgetting to specify dependencies. Our approach uses a higher abstraction level that avoids these kinds of mistakes. The mentioned special GNU Make features enabled us to implement such an approach. Especially the “eval” function is extremely helpful.

“eval” is a comparatively new feature in GNU Make (it was added in version 3.80). Its purpose is to feed text directly into the make parser. This overcomes the limitation that macros are not allowed to expand to multiple lines at the top parsing level. An “eval” always expands to zero lines, regardless of the number of lines the “called” macro has. “eval” also allows the parser to be fed dynamically from arbitrary sources, not only with what is written down in the makefile at execution time.

In this new build system, which now exists as a prototype on Linux and Windows and is in the works for Mac OS X and Solaris, a developer does not explicitly write down the dependencies and rules. Instead he requests a service from the build system, e.g. “add a library to the build”, “add a C++ object file to a library”, “add a link library prerequisite to a library”. Each of these service operations is written as a macro that adds the necessary targets, creates the necessary dependencies and provides the corresponding rules. Requesting these services is done by evaluating macro calls (“$(eval $(call MYMACRO,MYPARAMS))”). Most of the time a developer shouldn't be forced to add dependencies manually. [Remark: our current prototype uses these "raw" macro calls. If we wanted, we could wrap them behind a more user friendly syntax. Even a makefile generator or a declarative wrapper is possible.]

A particular problem in our builds is header generation. Some headers are created from other files (sdi, idl). We also “deliver” headers from their original location to a commonly accessed place. This is just another kind of header generation: the delivered headers are created by copy operations. So we can say that every header that is not taken from inside a module is a generated one. The new build system approach automatically adds dependencies that ensure that such headers have been created before the sources including them are built. No dependency has to be specified explicitly for that; the build system takes this information from other targets and prerequisites (e.g. the libraries a target library links against) and takes care of them in the corresponding requested build system service.
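The idea of requesting services instead of writing rules can be modelled outside of make. The following Python sketch is not the prototype's macro set, which is not shown on this page; the class `Build`, its method names and the `<lib>.headers` stand-in target for "the headers of that library have been delivered" are all assumptions made for the illustration.

```python
class Build:
    """Collects service requests and derives the dependency graph from them."""

    def __init__(self):
        self.objects = {}  # library -> list of its object files
        self.links = {}    # library -> list of libraries it links against
        self.sources = {}  # object file -> source file

    def add_library(self, lib):
        self.objects.setdefault(lib, [])
        self.links.setdefault(lib, [])

    def add_object(self, lib, source):
        obj = source.rsplit(".", 1)[0] + ".o"
        self.sources[obj] = source
        self.objects[lib].append(obj)

    def add_linked_lib(self, lib, other):
        self.links[lib].append(other)

    def dependencies(self):
        """target -> sorted prerequisites, with no hand-written dependency."""
        deps = {}
        for lib, objs in self.objects.items():
            linked = self.links[lib]
            deps[f"lib{lib}.so"] = sorted(objs + [f"lib{o}.so" for o in linked])
            for obj in objs:
                # Objects wait for the delivered headers of every linked library.
                deps[obj] = sorted([self.sources[obj]] + [f"{o}.headers" for o in linked])
        return deps


# Hypothetical project: library "app" links against library "util".
demo = Build()
demo.add_library("util")
demo.add_object("util", "util.cxx")
demo.add_library("app")
demo.add_linked_lib("app", "util")
demo.add_object("app", "main.cxx")
```

In `demo.dependencies()` the object `main.o` depends on `main.cxx` and on `util.headers`, although nobody wrote that dependency down: it follows from the request to link "app" against "util". The order of the requests does not matter, because the graph is derived only after all requests have been collected.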

As the new system still works on modules, it is possible to build only single modules with GNU Make while still all other modules are built with dmake – we have a very simple migration path. We can convert one module after another and kick build.pl out once the last makefile.mk has been replaced by a GNU Makefile.

Comparison of GNU Make and CMake based build system

We developed two prototypes, one with CMake and one based on GNU Make, that were able to build parts of OOo. We mainly looked at the C/C++ part of our sources. CMake already has built-in targets for them; for the GNU Make system we developed a set of macros that cover rules, prerequisites and dependencies. The project makefiles for the two systems have a comparable style and complexity.

We have a lot of other targets to build, but their dependencies and rules usually are not very complicated and they don't add as much to the depth of our dependency tree and the total build time as the C/C++ sources. They also are much less platform dependent than the compiling and linking of C++ sources. These would be "custom targets" in CMake, where all rules have to be defined by the developers, so it wouldn't be less work in CMake than in GNU Make. Both prototypes already contain some of them.

It seems that in case of custom targets in CMake dependencies always must be specified explicitly. In the GNU Make based system we can apply the same concepts that we used for C++ sources and that enabled us to specify dependencies inside the macros. Here the GNU Make based approach uses a higher abstraction level for the user provided makefiles. This allows to prevent a lot of possible errors that can happen if makefiles are written in the "classical" way. We didn't see a way to implement something similar with CMake as it lacks some of the necessary features.

The new GNU Make based build system will solve all of the listed recursive-make problems. In case it disables current working procedures, it offers usable workarounds (e.g. using “make -t” to avoid builds without full dependencies). In short:

- it creates stable builds with reliable dependencies;

- it allows optimal parallelization;

- it always builds as much as possible even if build breakages happen and therefore minimizes the number and length of compile-fix-recompile cycles;

- it shortens the time for “do nothing” builds as much as possible as it minimizes the number of file stats (this effect is already visible by just using a few modules);

- rebuilds just work in the shortest possible time even without special knowledge.

The CMake system solves the stability problem, but it doesn't solve it as completely as the GNU Make based system because it requires adding dependencies manually much more often. So it is still possible to have builds running by luck just because the prerequisite behind a missing dependency was accidentally built by another part of the build tree before.

We don't have a migration path for CMake, but we have a perfect and simple one for the GNU Make build system.

CMake makes it harder to work in the module based way that many developers are used to. There is no problem to do that with our GNU Make based system.

CMake gives a benefit for the daily developer work on Windows. OTOH it seems likely that an IDE can be used with the GNU Make based build system as well (using the “external build tool” feature of the IDE). We need more time to evaluate how to provide a solution that is ready to use without manual tweaking.

In Hamburg we already build from more than one code repository into a common output directory. In future we might want to split our code repository into even more pieces, but the basic problem already exists now where we have only two of them.

In our GNU Make based build system we can choose the top level directories and make all other paths relative to it. We have a source directory, a working directory (that will contain all generated files and so replaces the module local output folders) and an output directory (nowadays called “solver”). Every makefile that has access to the folder containing the build system macros can deliver into the same output directory. So we don't see a problem to adjust the build system for the requirement of several source roots in combination with several final products generated from a common working directory. We also maintain the module structure of our current build system that already has proven to be able to cope with several source trees.

CMake sets some additional hurdles as there always is a "root" CMakeLists.txt that controls the others that themselves can't be used standalone. This “one makefile tree, one workdir, one project” approach requires that we will have several root makefiles that will have some common content. CMake also somewhat destroys the module oriented system we have nowadays as the makefiles can't be used separately. So all in all it can be expected that CMake will create more problems on the way, though it does not seem to be impossible. It's hard to quantify that without trying.